I copied the file into the Downloads directory on my Unbuntu laptop and then fired up the new MySQL Shell. After login, I created a new schema creatively named zips with session.createSchema('zips'). When then set the db object to this new schema with the command \use zips.

|

| Creating a new schema named 'zips' and then informing the system that I wish to use this new schema as the db object |

Now it is time to populate the schema with a collection for holding documents. The collection is named zip and is created with db.createCollection('zip') The next step is to read the zips.json file into the collection using Python

|

| We need to create a new collection named zip in the schema we just created and then switch to Python mode to read in the data line by line and store it as a document in the zip collection. |

You might want to go back and read the wonderful presentation and scripts by Giuseppe Maxia on loading MongoDB data into MySQL at https://github.com/datacharmer/mysql-document-store as he originated this very useful bit of code.

One thing that helps is that this data set has a _id field that the MySQL Document Store will grab and use as the InnoDB primary key. I will have to brush up on my Python to extract another value from data sets to use for the _id field.

And we can now perform NoSQL searches on the data.

|

| A NoSQL search of the data in the zip collection |

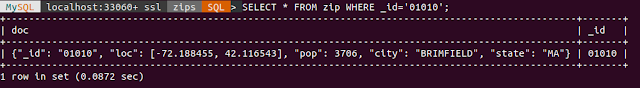

Or SQL. First we have to change to SQL node with \sql and then search for the same record with SELECT * FROM zip WHERE _id='01010';

|

| A SQL search of the data in the zip table |

If you have questions about porting data from MongoDB into the MySQL Document Store or the Document Store in general, please drop me a line.